Background

DocuFlow is a document management platform built by Inspectorio, tailored for supply chain document management. To ship it quickly, we built it on top of an external service that charges per API call, so every call we make to its servers is a direct cost to us.

Problems with the current implementation

Because the external service charges per API call, our costs grew with usage, which made scaling expensive. When I joined the team, the number of users on the platform was growing steadily, which meant Inspectorio was paying more and more for that service. For example, a single client could easily consume 25-50% of the API allowance in our contract.

I therefore initiated a project to reduce the number of API calls we make while keeping the same user experience. In the long term, this would also help us lower the subscription fee and reduce our dependency on that service.

Ideation and Solution

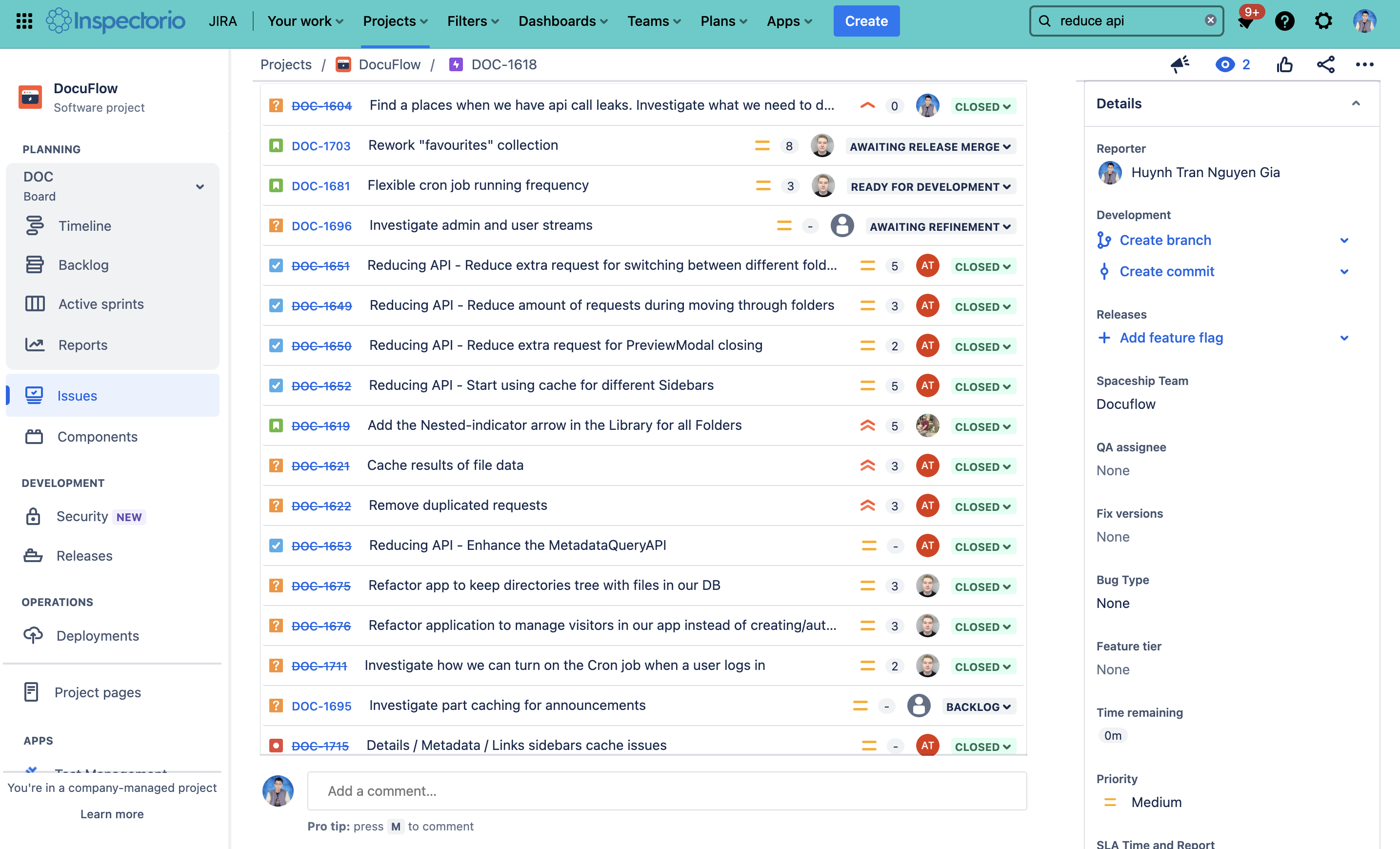

After several discussions with the team, we identified a few ways to reduce API consumption. I created a new Epic for this work, containing multiple spikes, stories, and tasks to investigate and implement each idea.

Solution 1: Rebuild the Favorites section

First, we ran a small spike to find the features consuming the most API calls. After looking at several of them, we identified the Favorites section as a strong candidate.

The platform lets users add documents to a Favorites section. Whenever a user opened the homepage, the system made multiple calls to the external service to fetch the data for that section. Because the section sits on the homepage, almost every user loaded it. That small section alone consumed ~7% of all API calls, making it the top candidate from this spike.

So we decided to rebuild this section as follows:

- Whenever the user marks a document as a favorite, we save its data (document name, file type,…) in our own database

- When the user opens the homepage, instead of calling the external service, we use internal API calls to get the data (which cost us $0)

- We only call the external service's API when the user opens the file/folder to preview it

With this implementation, we also needed to migrate existing favorites to our database, update some endpoints, and update the request payloads.
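To make the new read path concrete, here is a minimal Python sketch, assuming an in-memory dictionary stands in for our own database and a hypothetical `ExternalDocumentClient` stands in for the paid service. The names `FavoritesService`, `FavoriteRecord`, `mark_favorite`, `list_favorites`, and `preview` are illustrative, not DocuFlow's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class FavoriteRecord:
    """Metadata copied into our own database when a document is favorited."""
    document_id: str
    name: str
    file_type: str


class ExternalDocumentClient:
    """Hypothetical stand-in for the paid external service; counts billable calls."""

    def __init__(self) -> None:
        self.billable_calls = 0

    def fetch_document(self, document_id: str) -> dict:
        self.billable_calls += 1
        return {"id": document_id, "content": f"<content of {document_id}>"}


@dataclass
class FavoritesService:
    external: ExternalDocumentClient
    # Hypothetical in-memory table keyed by user ID, then by document ID.
    _store: dict[str, dict[str, FavoriteRecord]] = field(
        default_factory=dict, init=False, repr=False
    )

    def mark_favorite(self, user_id: str, record: FavoriteRecord) -> None:
        # Save the metadata locally at the moment the user favorites the document.
        self._store.setdefault(user_id, {})[record.document_id] = record

    def list_favorites(self, user_id: str) -> list[FavoriteRecord]:
        # Homepage path: served entirely from our own store, no external call.
        return list(self._store.get(user_id, {}).values())

    def preview(self, user_id: str, document_id: str) -> dict:
        # Only opening a file/folder for preview hits the external service.
        return self.external.fetch_document(document_id)
```

In this sketch, loading the homepage any number of times leaves `billable_calls` unchanged; only `preview` increments it.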

Solution 2: Flexible cron job running frequency

While checking inactive organizations, I noticed that they were still consuming API calls, which seemed odd. After checking with the team, I learned that these calls came from a cron job that fetched updates from the stream every minute, even when there were no updates!

This cron job alone accounted for ~30% of all API calls. Since we had many inactive organizations, fixing it looked like a big win, so I booked meetings with the team to find a more efficient way to use the API in this case.

It turned out not to be a complicated task, so we agreed to make the cron job's frequency flexible:

- If there is any event (e.g. a user logs in, a user edits a document,…), the cron job runs every minute for the next 15 minutes

- If there has been no user activity for 15 minutes, the job stops and waits for the next event

With this approach, active users keep the same experience, and no API calls are wasted when nobody is using the platform.
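The scheduling rule can be sketched as a per-organization activity window. This is a minimal Python sketch under stated assumptions: `OrgSyncState` and `count_polls` are hypothetical names, times are passed in explicitly, and the one-minute tick still fires but only calls the API while the 15-minute window is open.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# From the text: keep polling for 15 minutes after the latest user event.
ACTIVE_WINDOW = timedelta(minutes=15)


@dataclass
class OrgSyncState:
    """Hypothetical per-organization state for the flexible cron job."""
    last_event_at: Optional[datetime] = None

    def record_event(self, at: datetime) -> None:
        # Any user event (login, document edit, ...) re-opens the polling window.
        self.last_event_at = at

    def should_poll(self, now: datetime) -> bool:
        # Call the API only while we are within 15 minutes of the latest event.
        if self.last_event_at is None:
            return False
        return now - self.last_event_at < ACTIVE_WINDOW


def count_polls(events: list[datetime], start: datetime, minutes: int) -> int:
    """Simulate a once-per-minute cron tick and count ticks that would call the API."""
    state = OrgSyncState()
    pending = sorted(events)
    polls = 0
    for i in range(minutes):
        now = start + timedelta(minutes=i)
        # Apply any events that happened at or before this tick.
        while pending and pending[0] <= now:
            state.record_event(pending.pop(0))
        if state.should_poll(now):
            polls += 1
    return polls
```

With no events, `count_polls` returns 0 for a full day, instead of the 1,440 calls (24 × 60) a fixed one-minute schedule would make; a single event in the middle of the day yields 15 polls. A real implementation might unschedule the job entirely, as described above, rather than keep a cheap no-op tick.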

Solution 3: Remove nested-folder preloading from the folder tree

Our platform lets users navigate through a folder tree. To decide whether to show the expand arrow next to each folder, the tree made extra API calls to check whether that folder contained nested documents.

Since this check used many API calls but added little value for users, I decided to retire it and show the arrow on every folder regardless of its content.

Now we only call the API when the user clicks the arrow to expand a folder. The loaded data is cached locally, so expanding the same folder again within the next few minutes doesn't trigger another API call.
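Here is a minimal Python sketch of lazy expansion with a short-lived local cache. `LazyFolderTree`, `FolderNode`, the injected `fetch_children` loader, and the five-minute `CACHE_TTL_SECONDS` are assumptions (the text only says "the next few minutes"); the current time is passed in as `now` so the behavior is easy to test.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical TTL: the text only says "the next few minutes".
CACHE_TTL_SECONDS = 5 * 60


@dataclass
class FolderNode:
    folder_id: str
    name: str
    # Every folder shows the expand arrow; nested content is no longer pre-checked.
    show_arrow: bool = True


@dataclass
class LazyFolderTree:
    # Hypothetical loader that calls the external service for one folder's children.
    fetch_children: Callable[[str], list[FolderNode]]
    # folder_id -> (time fetched, children)
    _cache: dict[str, tuple[float, list[FolderNode]]] = field(
        default_factory=dict, init=False, repr=False
    )

    def expand(self, folder_id: str, now: float) -> list[FolderNode]:
        cached = self._cache.get(folder_id)
        if cached is not None and now - cached[0] < CACHE_TTL_SECONDS:
            return cached[1]  # fresh cache hit: no API call
        children = self.fetch_children(folder_id)  # one API call, only on expand
        self._cache[folder_id] = (now, children)
        return children
```

Rendering the tree itself makes no calls at all; cost is paid only for folders the user actually opens.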

Solution 4: Cache folder and file metadata

Besides the documents themselves, metadata is indispensable on our platform (metadata is data related to a document, e.g. document version, applicable market,…). As a result, whenever a user opens a document, the system has to make many API calls to the external service to retrieve all of its data points.

The spike for this area produced 4 tickets to reduce API calls:

- Reduce the number of requests while moving through folders

- Reduce extra requests when closing the PreviewModal

- Reduce extra requests when switching between different folders/files

- Start using the cache for the different sidebars

In general, the solution is as follows:

- The system caches folder/file responses within one folder. When the user opens a folder and starts selecting different folders/files inside it (selecting means clicking a folder/file to see its sidebar info), the system doesn't make new requests for those folders/files. When the user opens a child folder or navigates back to the parent folder, the cache is invalidated.

- The system caches almost all sidebar responses (except the Activity and Assignees sidebars) within one file/folder. When the user selects a file/folder and switches between sidebars, each sidebar's response is added to the cache, so reopening a sidebar for the same file/folder doesn't make a new request. When the user selects a different file/folder, the cache is invalidated.
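The two invalidation rules can be sketched as two small caches, one scoped to the open folder and one scoped to the selected file/folder. This is a simplified Python sketch assuming hypothetical injected fetch functions for the external service; the class names are illustrative, and only the uncached `Activity` and `Assignees` sidebar names come from the text.

```python
from typing import Any, Callable, Optional

# From the text: these sidebars are never cached.
UNCACHED_SIDEBARS = {"Activity", "Assignees"}


class FolderScopedCache:
    """Caches folder/file responses while the user stays inside one folder."""

    def __init__(self, fetch_item: Callable[[str], Any]) -> None:
        self._fetch_item = fetch_item  # hypothetical call to the external service
        self._current_folder: Optional[str] = None
        self._items: dict[str, Any] = {}

    def open_folder(self, folder_id: str) -> None:
        # Opening a child folder or going back to a parent changes scope: invalidate.
        if folder_id != self._current_folder:
            self._current_folder = folder_id
            self._items.clear()

    def select_item(self, item_id: str) -> Any:
        # Selecting items inside the same folder reuses earlier responses.
        if item_id not in self._items:
            self._items[item_id] = self._fetch_item(item_id)
        return self._items[item_id]


class SidebarCache:
    """Caches sidebar responses while the same file/folder stays selected."""

    def __init__(self, fetch_sidebar: Callable[[str, str], Any]) -> None:
        self._fetch_sidebar = fetch_sidebar  # hypothetical (item_id, sidebar) -> data
        self._selected: Optional[str] = None
        self._sidebars: dict[str, Any] = {}

    def select(self, item_id: str) -> None:
        # Selecting a different file/folder invalidates all cached sidebars.
        if item_id != self._selected:
            self._selected = item_id
            self._sidebars.clear()

    def open_sidebar(self, sidebar: str) -> Any:
        if self._selected is None:
            raise RuntimeError("select a file or folder first")
        if sidebar in UNCACHED_SIDEBARS:
            return self._fetch_sidebar(self._selected, sidebar)  # always fresh
        if sidebar not in self._sidebars:
            self._sidebars[sidebar] = self._fetch_sidebar(self._selected, sidebar)
        return self._sidebars[sidebar]
```

Keying each cache to a single scope keeps invalidation trivial: changing scope clears everything, so responses cached for one folder or file are never reused for another.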

Based on how each role uses the platform, we applied the mechanism described above only to users with the Admin or User role. For users with the Visitor role (view-only permission), we instead invalidate the cache automatically every 20 minutes, which reduces API consumption even further.
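The role-dependent rule can be sketched by making invalidation depend on the user's role. In this hypothetical Python sketch (`RoleAwareCache`, `Role`, and `on_navigation` are illustrative names), Admin and User caches are cleared on navigation, while Visitor caches ignore navigation and are flushed lazily on the first read after 20 minutes have passed, rather than by a background timer.

```python
from enum import Enum
from typing import Any, Callable

# From the text: Visitor caches are invalidated every 20 minutes.
VISITOR_FLUSH_SECONDS = 20 * 60


class Role(Enum):
    ADMIN = "Admin"
    USER = "User"
    VISITOR = "Visitor"


class RoleAwareCache:
    """Hypothetical cache whose invalidation rule depends on the user's role."""

    def __init__(self, role: Role, fetch: Callable[[str], Any], now: float) -> None:
        self.role = role
        self._fetch = fetch  # hypothetical call to the external service
        self._entries: dict[str, Any] = {}
        self._last_flush = now

    def on_navigation(self) -> None:
        # Admin/User: navigating (new folder or new selection) invalidates the cache.
        # Visitor: navigation is ignored; only the 20-minute rule flushes.
        if self.role is not Role.VISITOR:
            self._entries.clear()

    def get(self, key: str, now: float) -> Any:
        # Visitor: flush everything once 20 minutes have passed since the last flush.
        if self.role is Role.VISITOR and now - self._last_flush >= VISITOR_FLUSH_SECONDS:
            self._entries.clear()
            self._last_flush = now
        if key not in self._entries:
            self._entries[key] = self._fetch(key)
        return self._entries[key]
```

A lazy check on read avoids running a timer per session while giving the same effect: no Visitor entry is served more than 20 minutes after the last flush.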

Outcomes

So, were we able to reduce API consumption? Definitely. Before each release, we recorded the number of API calls for a fixed list of tasks. After the release, we repeated the same tasks and saw a significant drop in API usage.

Overall, one month after releasing these enhancements, I can proudly share that our API usage dropped below the contractual limit (I can't share more detail for security reasons).